Section 20.1 Sums of Squares, Once More

¶Our motivational example will be the one we discussed in Section 18.1. Recall that \(r(n)\) denotes the (total) number of ways to represent \(n\) as a sum of squares, so that \(r(3)=0\) but \(r(9)=4\) and \(r(5)=8\text{.}\) Then we saw in Fact 18.2.9, more or less rigorously, that

Subsection 20.1.1 Errors, not just limits

¶As it happens, we can say something far more specific than just this limit. Recall one of the intermediate steps in our proof.

Notice that if I subtract the limit, \(\pi\text{,}\) from the bounds, I can think of this in terms of an error. Using absolute values, we get, for large enough \(n\text{,}\)

where the value of \(C\) is not just \(\pi\sqrt{2}\text{,}\) but something a little bigger because of the \(\frac{1}{2n}\) term.

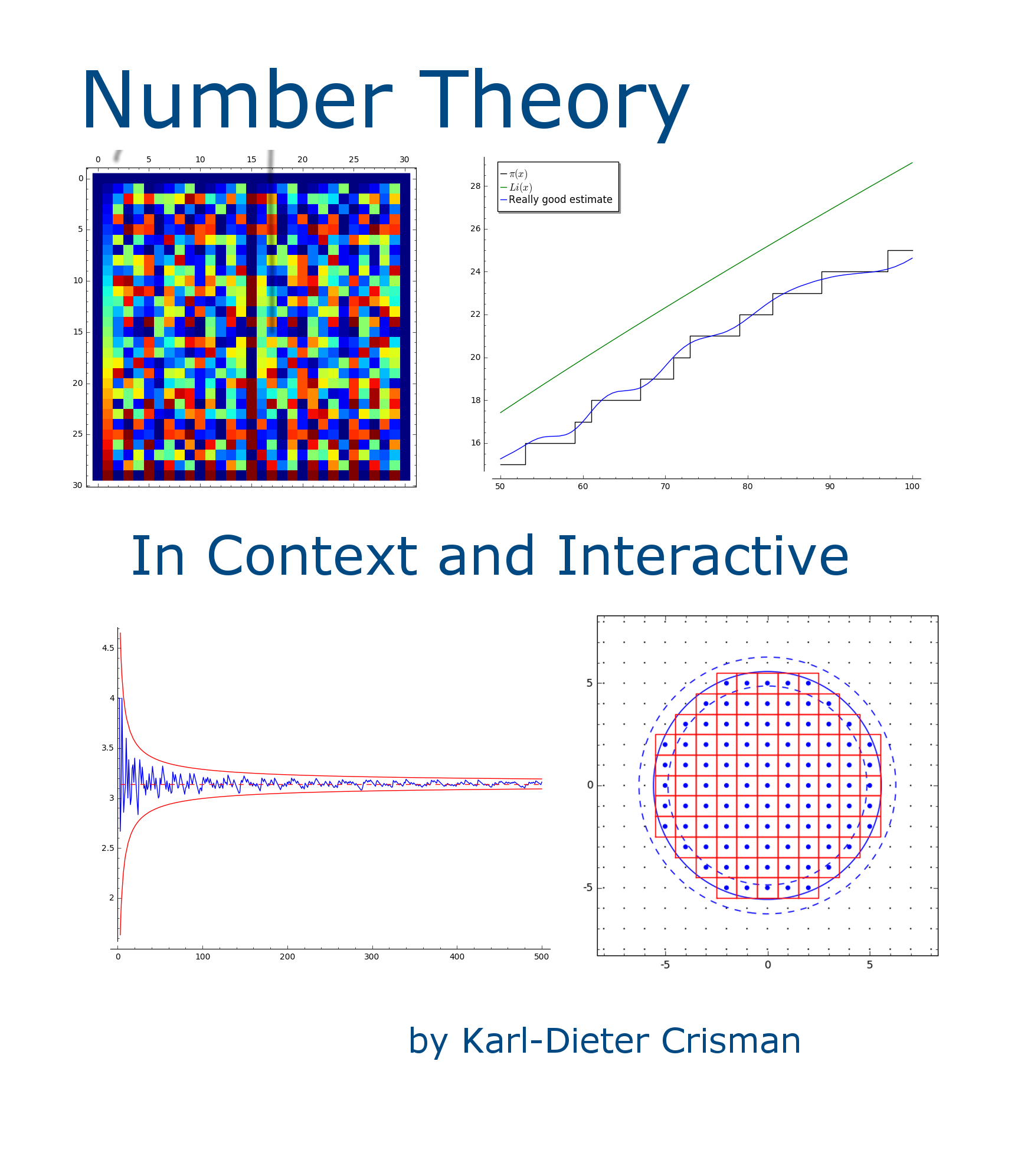

In the next two cells we set up some functions and then plot the actual number of representations compared with the upper and lower bound implied by this analysis. We include a static image at the end, but encourage you to explore.

Note that the actual number is well within the bounding curves given by the red lines, even for small \(n\text{.}\) This shows a general rule of thumb that, typically, the constant we prove will be a lot bigger than necessary. New research is about improving such bounds.

Subsection 20.1.2 Landau notation

¶It turns out there is a nice notation for how ‘big’ an error is.

Definition 20.1.2. Big Oh.

We say that \(f(x)\) is \(O(g(x))\) (“eff of eks is Big Oh of gee of eks”) if there is some positive constant \(C\) and some positive number \(x_0\) for which

This is known as Landau notation.

See Exercise Group 20.6.1–5 for some practice with this. In practice in this text, we will focus on \(C\) and elide details of \(x_0\) unless it is crucial to the narrative.

Example 20.1.3.

The average number of representations of an integer as a sum of squares is \(\pi\text{,}\) and if you do the average up to \(N\text{,}\) then the error will be no worse than some constant times \(1/\sqrt{N}\text{.}\) So the sum's error is Big Oh of \(1/\sqrt{N}\text{,}\) or \(O(x^{-1/2})\text{.}\)

It is unknown in this case just how small the error term really is. In 1906 it was shown that it is \(O(x^{-2/3})\) (note that this is a more accurate statement, see Exercise 20.6.5). See Figure 20.1.4 for a visual representation, where \(C=\pi\text{.}\)

It is also known that the error term is not as close as \(O(x^{-3/4})\text{;}\) see [C.7.25] for much more information at an accessible level.

Now let's apply these ideas to the divisor summation functions \(\tau\) and \(\sigma\) from Definition 19.1.1 in the previous chapter. (We will use these common alternate notations – \(\tau\) for \(\sigma_0\) and \(\sigma\) for \(\sigma_1\) – from Remark 19.1.2 throughout this chapter.) Namely, consider the following interesting question.

Question 20.1.5.

What is the “average” number of divisors of a positive integer? What is the “average” sum of divisors of a positive integer?

It turns out that clever combinations of many ideas from the course as well as calculus ideas will help us solve these questions! We will start with \(\tau\) in Section 20.2, and address \(\sigma\) starting in Section 20.4. Finally, answering these questions will motivate us to ask the (much harder) similar questions one can ask about prime numbers, starting in Chapter 21.